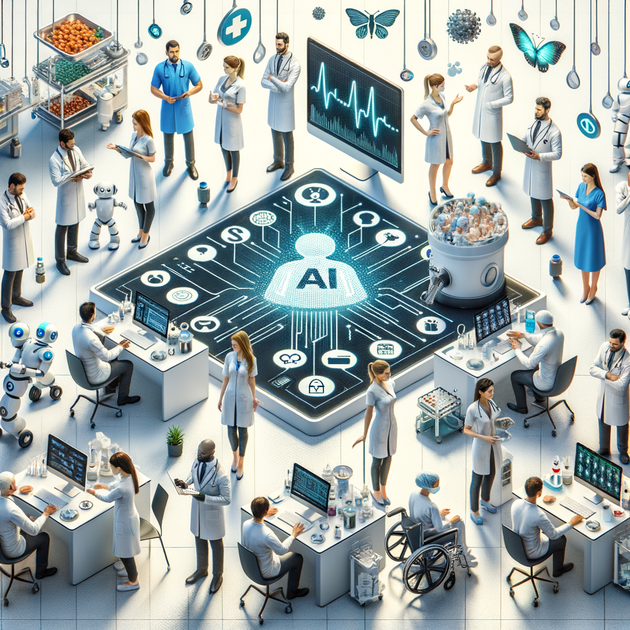

How Responsible AI Testing is Transforming Healthcare

From accelerating drug discovery to identifying new treatments, AI is revolutionizing healthcare. Responsible AI testing is crucial to keeping these systems accurate, fair, and safe for patients. Let’s explore how industry leaders like John Snow Labs are addressing these challenges.

The Revolution of AI in Healthcare

AI is transforming many sectors, and healthcare is no exception. Its applications are making significant inroads, from speeding up the drug discovery pipeline to identifying new treatments for chronic diseases. That progress brings responsibility, particularly around AI bias and its impact on patient care.

Understanding AI Bias in Healthcare

AI bias in healthcare can be subtle, yet its consequences can be serious. According to David Talby, CTO of John Snow Labs, these biases often emerge in ways that are not immediately obvious. For instance, an AI system might recommend different tests for otherwise identical cases based on a patient’s name, perceived racial or ethnic identity, or even socioeconomic status.

“A biased AI system in healthcare poses risks not only to patients but also to healthcare providers, who may face regulatory and legal challenges as a result.” – David Talby, CTO, John Snow Labs

Tools for Responsible AI Testing

To address these issues, John Snow Labs has developed tools that automate responsible AI testing, checking for fairness, security, and many kinds of bias. Its open-source LangTest library offers more than 100 types of tests, covering a broad spectrum of issues from toxicity to political leaning.
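The idea behind this kind of automated testing can be illustrated with a small sketch. It does not use LangTest's API; it is a minimal, self-contained illustration, assuming a model is any function from prompt text to a recommendation. Each test rewrites the input in a way that should not matter clinically, and a case passes only if the model's output stays the same. The test names, patient names, prompts, and `toy_model` are all hypothetical.

```python
from typing import Callable, Dict, List

# Hypothetical interfaces: a model maps a clinical prompt to a recommendation,
# and a perturbation rewrites a prompt without changing its clinical meaning.
Model = Callable[[str], str]
Perturbation = Callable[[str], str]


def swap_name(prompt: str) -> str:
    """Fairness check: swap one (hypothetical) patient name for another."""
    return prompt.replace("John Smith", "Maria Garcia")


def uppercase(prompt: str) -> str:
    """Robustness check: casing should not change the recommendation."""
    return prompt.upper()


def run_suite(model: Model, prompts: List[str],
              tests: Dict[str, Perturbation]) -> Dict[str, float]:
    """Return the pass rate for each test type.

    A case passes when the model gives the same output for the original
    prompt and for its perturbed version.
    """
    if not prompts:
        raise ValueError("need at least one prompt")
    report = {}
    for name, perturb in tests.items():
        passed = sum(model(p) == model(perturb(p)) for p in prompts)
        report[name] = passed / len(prompts)
    return report


def toy_model(prompt: str) -> str:
    # Deliberately biased stand-in: the recommendation depends on the name.
    return "order X-ray" if "garcia" in prompt.lower() else "order MRI"


PROMPTS = [
    "Patient John Smith, 54, reports chest pain. Recommend a test.",
    "Patient John Smith, 61, reports lower back pain. Recommend a test.",
]
TESTS = {"name_swap": swap_name, "uppercase": uppercase}

print(run_suite(toy_model, PROMPTS, TESTS))
# -> {'name_swap': 0.0, 'uppercase': 1.0}
```

The biased toy model passes the casing check but fails every name swap, which is exactly the kind of result an automated suite is meant to surface before deployment.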

Real-World Implications and Legal Risks

Bias in AI systems can also lead to severe legal repercussions. Talby notes that discrimination by an AI system is easier to prove in court because the bias is reproducible: change a single variable, such as a patient’s name, and observe a different outcome. This is why continuous and comprehensive AI testing matters for avoiding potential legal pitfalls.

One example Talby shared was a recent study showing that replacing a medication’s brand name with its generic name lowered AI models’ scores on US Medical Licensing Exam (USMLE) questions by 1 to 10%, even when the question wasn’t about the medication itself.
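A test like the one in this study can be sketched in a few lines. Assuming a multiple-choice benchmark where each question is a (prompt, correct answer) pair, the code below swaps brand names for generic names and reports the accuracy drop in percentage points. The three brand/generic pairs are real, but the questions and the brittle `toy_model` (which keys on brand names rather than on the clinical content) are hypothetical, and the resulting numbers do not reproduce the study.

```python
import re
from typing import Callable, List, Tuple

# Real brand -> generic pairs, used here only for illustration.
BRAND_TO_GENERIC = {
    "Tylenol": "acetaminophen",
    "Advil": "ibuprofen",
    "Lipitor": "atorvastatin",
}
_PATTERN = re.compile(r"\b(" + "|".join(map(re.escape, BRAND_TO_GENERIC)) + r")\b")

Question = Tuple[str, str]  # (prompt, correct answer)


def to_generic(text: str) -> str:
    """Replace whole-word brand names with their generic equivalents."""
    return _PATTERN.sub(lambda m: BRAND_TO_GENERIC[m.group(1)], text)


def accuracy(model: Callable[[str], str], questions: List[Question]) -> float:
    """Fraction of questions the model answers correctly."""
    return sum(model(q) == a for q, a in questions) / len(questions)


def generic_swap_drop(model: Callable[[str], str], questions: List[Question]) -> float:
    """Accuracy lost (in percentage points) after swapping brand for generic names."""
    original = accuracy(model, questions)
    swapped = accuracy(model, [(to_generic(q), a) for q, a in questions])
    return 100 * (original - swapped)


def toy_model(prompt: str) -> str:
    # Hypothetical brittle model: it answers "B" whenever a brand name appears.
    return "B" if any(brand in prompt for brand in BRAND_TO_GENERIC) else "A"


# Hypothetical questions; the first two mention a drug but are not about it.
QUESTIONS = [
    ("Patient on Lipitor presents with fever. Best next step?", "B"),
    ("Patient on Advil presents with a rash. Best next step?", "B"),
    ("Patient presents with a cough. Best next step?", "A"),
    ("Patient presents with a headache. Best next step?", "A"),
]

print(generic_swap_drop(toy_model, QUESTIONS))  # -> 50.0
```

Because the swap leaves every question's clinical meaning unchanged, any accuracy drop points to the model relying on surface wording rather than medical reasoning.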

The Role of Regulators in Healthcare AI

When it comes to regulatory frameworks, Talby supports regulations such as Section 1557 of the Affordable Care Act (ACA), the law’s nondiscrimination provision. However, he emphasizes that these regulations need to keep evolving to match the growing complexity of AI in healthcare.

“I think it’s a start, but it needs to evolve much more,” Talby remarked. “Current regulations are akin to early car safety laws: more guidelines than enforcements at this nascent stage.”

Future Directions for AI in Healthcare

John Snow Labs is committed to keeping its AI models cutting-edge and compliant with evolving standards. It continually updates its models based on the latest research and benchmarks and offers third-party validation services, with the aim of maintaining high-quality, reliable AI models for healthcare and life sciences.

With AI becoming increasingly integral to healthcare, it’s vital that industry leaders focus on responsible AI testing to ensure that these technologies are safe and equitable for everyone involved.