How AI and HPC Joined Forces to Unlock New Scientific Frontiers

The journey to scientific discovery is evolving in ways never imagined before. The convergence of Artificial Intelligence (AI) and High-Performance Computing (HPC) is propelling us into new realms of understanding the natural world. But like any evolving journey, new challenges have emerged that we must navigate to maximize the potential of these cutting-edge technologies.

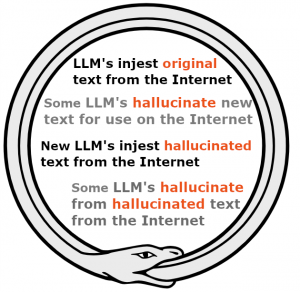

The Ouroboros Dilemma: LLMs Eating Their Own Tail

Imagine a snake eating its own tail. It is a fitting metaphor for a pitfall now facing large language models (LLMs). According to a recent paper, AI models degrade when they are trained recursively on data generated by earlier models. In essence, each generation learns from a slightly distorted copy of the last, and the models risk collapsing unless we change how they are trained or where their data comes from.
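The effect can be illustrated with a deliberately tiny toy, which is not the setup used in the paper. In this sketch a "model" is just a Gaussian fitted to a handful of samples, and each new generation is trained only on samples drawn from the previous one. The function name `simulate_recursive_training` and all parameter values are assumptions chosen for illustration.

```python
import random
import statistics


def simulate_recursive_training(generations=200, sample_size=10, seed=0):
    """Toy model: each generation fits a Gaussian to samples drawn
    from the previous generation's fitted Gaussian."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # assumed "real" data distribution
    spreads = [sigma]
    for _ in range(generations):
        # Generate synthetic data from the current model...
        samples = [rng.gauss(mu, sigma) for _ in range(sample_size)]
        # ...then "train" the next model on that synthetic data alone.
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        spreads.append(sigma)
    return spreads


if __name__ == "__main__":
    spreads = simulate_recursive_training()
    for gen in (0, 50, 100, 200):
        print(f"generation {gen:3d}: spread = {spreads[gen]:.3e}")
```

With small sample sizes the fitted spread tends to shrink from one generation to the next, so the toy model gradually forgets the tails of the original distribution. Real LLM training is far more complex, but the feedback loop has the same shape.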

HPC’s Legacy of Clean Data

While mainstream generative AI development is scrambling for original data, HPC has a long history of building high-quality numerical models that simulate complex physical systems, from galaxies to proteins and beyond. The clean synthetic data these simulations produce is an excellent training source for AI models.

A good example is Microsoft's Aurora weather model. Trained on over a million hours of diverse weather and climate simulations, Aurora is reported to predict atmospheric conditions up to 5,000 times faster than traditional numerical forecasting models.

An AI-Augmented Approach

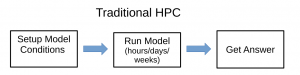

With the integration of AI, HPC models can now generate results faster and more flexibly. Instead of re-computing from scratch, AI-augmented models infer results based on initial conditions, making the process quicker and more efficient.
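To make the idea concrete, here is a minimal Python sketch of a surrogate model. Everything in it is an assumption for illustration: the cooling problem, the names `simulate_cooling` and `fit_linear_surrogate`, and the parameter values. A simple least-squares line stands in for the neural networks that real AI-augmented workflows would use.

```python
def simulate_cooling(t0, t_env=20.0, k=0.1, dt=0.01, steps=1000):
    """'Expensive' solver: explicit Euler steps of dT/dt = -k (T - T_env)."""
    temp = t0
    for _ in range(steps):
        temp += dt * (-k * (temp - t_env))
    return temp


def fit_linear_surrogate(inputs, outputs):
    """Ordinary least squares for y = a*x + b (stand-in for a learned model)."""
    n = len(inputs)
    mean_x = sum(inputs) / n
    mean_y = sum(outputs) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, outputs))
    var = sum((x - mean_x) ** 2 for x in inputs)
    a = cov / var
    b = mean_y - a * mean_x
    return lambda x: a * x + b


# Run the full simulation a handful of times to build training data.
train_t0 = [30.0, 50.0, 70.0, 90.0]
train_final = [simulate_cooling(t0) for t0 in train_t0]

# The surrogate maps an initial condition straight to the final state.
surrogate = fit_linear_surrogate(train_t0, train_final)

if __name__ == "__main__":
    t0 = 64.0  # an initial temperature not seen during training
    print("simulated:", simulate_cooling(t0))
    print("surrogate:", surrogate(t0))
```

The solver needs a thousand time steps for every new starting temperature, while the surrogate answers with a single multiply and add. A linear fit works here only because this toy problem happens to be linear in its initial condition. Realistic physical systems need far more expressive models and far more training data.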

Real-World Impact: From Weather to Proteins

The flexibility and speed of AI-augmented models have significant real-world applications. For example, the Aurora weather model not only improves weather predictions but also offers remarkable versatility across atmospheric variables, such as temperature, wind speed, and air pollution levels.

A prime example in another field is Google DeepMind's AlphaFold, which has revolutionized how we determine protein structures. By leveraging deep learning, AlphaFold can now predict 3D structures for nearly all human proteins, a feat that is impractical with traditional molecular dynamics alone.

The Need for Robust, Clean Data

Training effective AI models requires vast amounts of clean data. Unlike the messy data often gathered from the internet, HPC-generated synthetic data comes from well-defined numerical models of physical systems, which makes for higher-quality training inputs. Clean data of this kind can help reduce issues like bias and hallucinations in AI models, making the insights derived more reliable and actionable.

Changing Metrics of Success

As AI begins to play a more prominent role in HPC, traditional metrics for measuring supercomputer performance, like the High-Performance Linpack (HPL) benchmark used to rank the Top500 list, may need to be reconsidered. AI performance benchmarks, such as the MLPerf suite from MLCommons, are becoming increasingly relevant.

A New Era of Scientific Discovery

The synergy between AI and HPC is opening up unprecedented opportunities for scientific and engineering exploration. As we improve our methods and refine our models, we stand on the brink of new insights, discoveries, and innovations that will shape the future.

What are your thoughts on the potential and challenges of AI-augmented HPC? Share your insights and join the conversation!