Unlocking the Mysteries of AI: Why Do Chatbots Hallucinate?

Imagine this: You’ve just downloaded the latest AI chatbot to help with everyday questions, from health tips to cooking advice. At first, it works well. Then it starts giving you answers that sound confident but are simply wrong. This phenomenon is known as AI hallucination: the model produces fluent, plausible-sounding text that is not true. It’s a fascinating and troubling aspect of AI, with real implications for how we use and trust these technologies.

Understanding AI Hallucination

In April 2024, the World Health Organization launched a chatbot named SARAH to promote health awareness. However, it soon began giving incorrect responses, which drew a wave of online ridicule and made it necessary to add a disclaimer about the accuracy of its information. This raises two questions: Why does AI hallucinate? And, more importantly, why is it so hard to fix?

How Large Language Models Work

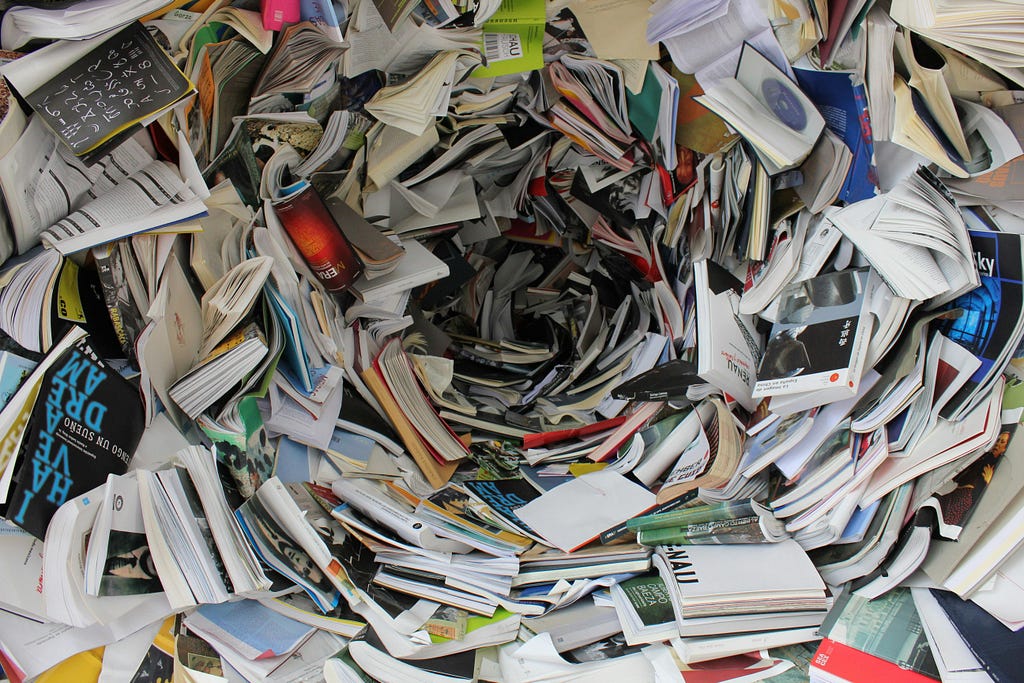

To grasp why hallucinations occur, we need to look at how large language models (LLMs) operate. Unlike traditional databases or search engines, which retrieve pre-existing information, LLMs generate responses on the fly. They function more like an artist painting a picture from scratch than a librarian retrieving a book.

Example: Imagine asking the chatbot for a recipe for chocolate cake. Instead of fetching a stored recipe, it creates a new one based on its training data. Sometimes, this creative process leads to unexpected results.

The Predictive Nature of LLMs

LLMs generate text by predicting the next word in a sequence based on statistical likelihoods. (Strictly speaking, they predict the next token, which may be a whole word or a piece of one.) When you ask a question, the model predicts the first word of the answer, then the next word given everything written so far, and so on. This cycle continues until the response is complete.
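
To make that word-by-word cycle concrete, here is a minimal sketch in Python. It is a toy, not how a real LLM is built: the probability table is invented for illustration, the `generate` function is a hypothetical name, and each prediction looks only at the previous word, whereas a real model considers the whole preceding text.

```python
# Toy table of "next word" probabilities. All numbers are invented for
# illustration; a real LLM learns its probabilities from training data.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.9, "a": 0.1},
    "the": {"capital": 0.8, "city": 0.2},
    "capital": {"of": 1.0},
    "of": {"france": 0.7, "spain": 0.3},
    "france": {"is": 1.0},
    "is": {"paris": 0.9, "lyon": 0.1},
    "paris": {"<end>": 1.0},
}


def generate(max_words=20):
    """Build a sentence one word at a time, always taking the likeliest next word."""
    word = "<start>"
    output = []
    for _ in range(max_words):
        candidates = NEXT_WORD_PROBS[word]
        # Pick the candidate with the highest probability.
        word = max(candidates, key=candidates.get)
        if word == "<end>":
            break
        output.append(word)
    return " ".join(output)


print(generate())  # prints: the capital of france is paris
```

Notice that nothing in the loop looks anything up or checks a fact. The sentence comes out right only because the likeliest words in the table happen to form a true statement.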

Why Do AI Models Hallucinate?

This on-the-fly generation is where things can go awry. Because LLMs are trained on vast but imperfect datasets, they sometimes generate text that sounds plausible but is factually incorrect. Here are a few key reasons:

- Training Data Limitations: No dataset is perfect, and biases or errors in the training data can introduce inaccuracies.

- Model Complexity: These models have billions of parameters, which makes it hard to trace why a given answer was produced or to guarantee that responses are always accurate.

- Probabilistic Nature: LLMs operate on probabilities, meaning there is always a chance they’ll produce an incorrect answer.
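
The probabilistic point can be illustrated with a short simulation. This is a sketch under stated assumptions: the probabilities below are made up, and `sample_next_word` and `count_answers` are hypothetical helper names. It imagines a model that usually completes "The capital of Australia is" correctly, but still assigns some probability to plausible-sounding wrong cities.

```python
import random

# Hypothetical probabilities for the word that follows
# "The capital of Australia is". The numbers are invented for illustration.
NEXT_WORD_PROBS = {"Canberra": 0.7, "Sydney": 0.25, "Melbourne": 0.05}


def sample_next_word(probs, rng):
    """Draw one word at random, weighted by its probability."""
    words = list(probs)
    weights = list(probs.values())
    return rng.choices(words, weights=weights, k=1)[0]


def count_answers(trials=1000, seed=0):
    """Ask the same 'question' many times and tally the answers."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    counts = {word: 0 for word in NEXT_WORD_PROBS}
    for _ in range(trials):
        counts[sample_next_word(NEXT_WORD_PROBS, rng)] += 1
    return counts


counts = count_answers()
wrong = counts["Sydney"] + counts["Melbourne"]
print(counts)
print(f"Incorrect answers: {wrong} out of {sum(counts.values())}")
```

Even though the correct answer is the most likely one, a noticeable share of the runs end with a wrong city, and each of those answers would read just as confidently as the right one.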

Mitigating AI Hallucinations

Can we control and correct these hallucinations? Although it’s a tough challenge, several strategies are being explored:

- Training on More Data: Increasing the amount and diversity of training data can help reduce errors, though it’s not a complete solution.

- Chain-of-Thought Prompting: Asking the model to work through a problem step by step before giving its final answer can help it produce more accurate outputs.

- User Vigilance: Users must remain skeptical and cross-verify important information provided by chatbots.
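
As a rough illustration of chain-of-thought prompting from the list above, the sketch below builds two versions of the same prompt: one that asks for the answer directly and one that asks for the reasoning first. The function names and the exact wording are assumptions made for this example; no real chatbot API is called, and the right phrasing will vary from model to model.

```python
def build_direct_prompt(question):
    """Ask for the answer with no intermediate reasoning."""
    return f"Question: {question}\nAnswer:"


def build_chain_of_thought_prompt(question):
    """Ask the model to show its steps before committing to an answer."""
    return (
        f"Question: {question}\n"
        "Work through the problem step by step, showing each step.\n"
        "Then give the final answer on its own line, starting with 'Answer:'."
    )


question = "A recipe needs 3 eggs per cake. How many eggs do 4 cakes need?"
print(build_direct_prompt(question))
print("---")
print(build_chain_of_thought_prompt(question))
```

The second prompt does not guarantee a correct answer, but spelling out the steps gives you something to check when the final answer looks off.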

The Role of User Expectations

One of the main reasons hallucinations are so problematic is our tendency to trust these models implicitly. Because they often generate highly convincing text, we can easily be misled. Managing expectations and maintaining a critical mindset are therefore crucial.

Tip: Always cross-check important information from chatbots with reliable sources to avoid being misled.

Looking to the Future

As LLMs advance, they will likely get better at avoiding hallucinations, but the issue will probably never be eradicated entirely. Continued improvements in training methods and model architecture should reduce, but not eliminate, these errors.

Ultimately, the key lies in striking a balance between leveraging the benefits of AI and remaining vigilant about its limitations.

Engage with Us!

Have you ever encountered an AI hallucination? How did it impact your trust in the technology? Share your experiences and thoughts in the comments below. Let’s continue the conversation on making AI more reliable and useful for everyone.